A few hours in GIMP - Whenever I start painting, there's always a fight between keeping it realistic, or heading off in some surreal tangent pic.twitter.com/JGd0F7wfT6

— oioiiooixiii (@oioiiooixiii) December 28, 2016

I don't understand the 2016 hate. Seems to be a USA/Eurocentric thing; every year since 2007 has been "bad" in that case. But I'll play along

— oioiiooixiii (@oioiiooixiii) December 31, 2016

Start of 2016 [mouth.jpg] - End of 2016 [anus.jpg]

— oioiiooixiii (@oioiiooixiii) December 31, 2016

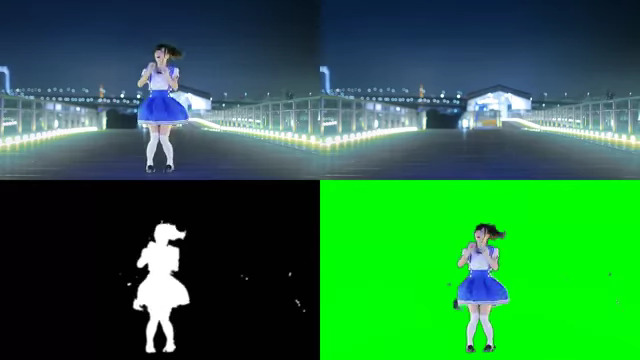

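```bash
# Replace a static background with a green (#00ff00) matte.
# Input 0: background.png, a clean frame of the scene without the subject.
# Input 1: video.mkv, footage shot against that same background.
# The difference between each frame and the background is turned into a
# black/white mask (curves, eq, smartblur); 'maskedmerge' then shows the
# video where the mask is white and the matte where it is black.
ffmpeg \
  -i background.png \
  -i video.mkv \
  -filter_complex \
  "
color=#00ff00:size=1280x720 [matte];
[1:0] format=rgb24, split[mask][video];
[0:0][mask] blend=all_mode=difference,
curves=m='0/0 .1/0 .2/1 1/1',
format=gray,
smartblur=1,
eq=brightness=30:contrast=3,
eq=brightness=50:contrast=2,
eq=brightness=-10:contrast=50,
smartblur=3,
format=rgb24 [mask];
[matte][video][mask] maskedmerge,format=rgb24
  " \
  -shortest \
  -pix_fmt yuv422p \
  result.mkv
```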
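Stripped of the smoothing steps, the filtergraph above applies a per-pixel rule: where a frame differs enough from the background image, keep the video; elsewhere, show the matte. A toy sketch of that rule in plain bash, on one hypothetical row of 8-bit grey values. The fixed `threshold` is an assumption standing in for the curves/eq/smartblur chain, which in the real graph also softens the mask edges:

```bash
#!/usr/bin/env bash
# Toy model of the background-removal mask: hypothetical 8-bit grey values,
# not FFmpeg code. A fixed threshold stands in for curves/eq/smartblur.
background=(40 40 40 40 40 40)
frame=(41 39 200 210 42 40)   # pixels 2 and 3 (0-based) hold the "subject"
matte="G"                     # placeholder for the #00ff00 matte colour
threshold=25                  # hypothetical cut-off for "different enough"

result=()
for i in "${!frame[@]}"; do
  # Absolute difference, as in blend=all_mode=difference
  diff=$(( frame[i] - background[i] ))
  if (( diff < 0 )); then
    diff=$(( -diff ))
  fi
  if (( diff > threshold )); then
    result+=("${frame[i]}")   # mask is white: keep the video pixel
  else
    result+=("$matte")        # mask is black: show the matte
  fi
done
echo "${result[*]}"
```

Small differences (sensor noise, compression) fall under the threshold and become matte, which is roughly what the steep curves and contrast boosts are doing in the real chain.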

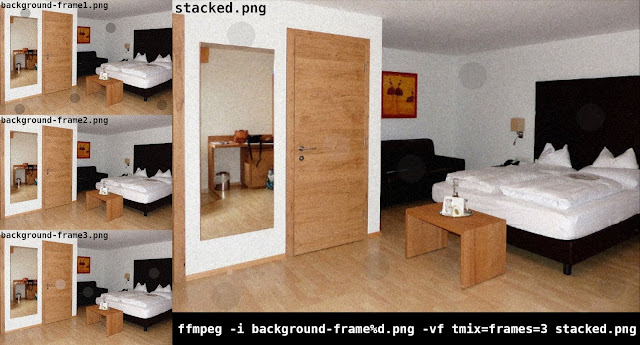

The image-stacking process is just to create a cleaner background image to work with. The idea is to remove momentary anomalies by averaging frames together. The benefits may be negligible though. Image-stacking can be done many ways. I created a quick demo for you using FFmpeg:

— oioiiooixiii (@oioiiooixiii) November 4, 2019

```bash
# Image stacking with FFmpeg using the 'tmix' filter.
# More info on the 'tmix' filter: https://ffmpeg.org/ffmpeg-filters.html#tmix
ffmpeg -i background-frame%d.png -vf tmix=frames=3 stacked.png

# Image stacking is also possible with ImageMagick
convert *.png -evaluate-sequence mean stacked.png
```
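The averaging idea is easy to see on toy numbers. A minimal bash sketch, assuming three hypothetical frames of one grey row each, where the middle frame has a momentary anomaly at one pixel:

```bash
#!/usr/bin/env bash
# Toy model of image stacking by per-pixel mean (hypothetical 8-bit values).
# frame2 contains a momentary anomaly (e.g. someone walking past) at pixel 1.
frame1=(10 10 10 10)
frame2=(10 250 10 10)
frame3=(10 10 10 10)

stacked=()
for i in "${!frame1[@]}"; do
  sum=$(( frame1[i] + frame2[i] + frame3[i] ))
  stacked+=("$(( sum / 3 ))")   # integer mean, rounded down
done
echo "${stacked[*]}"            # the anomaly is diluted, not removed
```

With only three frames the anomaly is reduced rather than erased, which may be why the benefits can be negligible. More frames dilute it further; a median instead of a mean would reject a single outlier outright (ImageMagick's `-evaluate-sequence` accepts `median` as well as `mean`).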

```bash
# Generate video motion vectors, in various colours, and merge together
# NB: Includes fixed 'curves' filters for the issue outlined in the blog post
ffplay \
  -flags2 +export_mvs \
  -i video.mkv \
  -vf \
  "
split=3 [original][original1][vectors];
[vectors] codecview=mv=pf+bf+bb [vectors];
[vectors][original] blend=all_mode=difference128,
eq=contrast=7:brightness=-0.3,
split=3 [yellow][pink][black];
[yellow] curves=r='0/0 0.1/0.5 1/1':
g='0/0 0.1/0.5 1/1':
b='0/0 0.4/0.5 1/1' [yellow];
[pink] curves=r='0/0 0.1/0.5 1/1':
g='0/0 0.1/0.3 1/1':
b='0/0 0.1/0.3 1/1' [pink];
[original1][yellow] blend=all_expr=if(gt(X\,Y*(W/H))\,A\,B) [yellorig];
[pink][black] blend=all_expr=if(gt(X\,Y*(W/H))\,A\,B) [pinkblack];
[pinkblack][yellorig]blend=all_expr=if(gt(X\,W-Y*(W/H))\,A\,B)
  "

# Process:
# 1: Three copies of the input video are made
# 2: Motion vectors are drawn onto one stream
# 3: The result of #2 is 'difference128' blended with an original video stream;
#    the brightness and contrast are adjusted to improve clarity,
#    and three copies of this vectors result are made
# 4: Curves are applied to one vectors stream to create a yellow colour
# 5: Curves are applied to another vectors stream to create a pink colour
# 6: The original video stream and the yellow vectors are combined diagonally
# 7: The pink vectors stream and the unaltered ('black') vectors stream are combined diagonally
# 8: The results of #6 and #7 are combined diagonally (opposite direction)
```
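The diagonal splits in steps 6 to 8 come from the blend expressions: `if(gt(X,Y*(W/H)),A,B)` takes the first input (A) where a pixel lies past the main diagonal, and the second (B) elsewhere; `W-Y*(W/H)` flips it to the other diagonal. A small bash sketch that prints which input wins on a hypothetical 8x4 frame. It rewrites `X > Y*W/H` as `X*H > Y*W` to stay in integer arithmetic; the function name `diagonal_map` is made up for illustration:

```bash
#!/usr/bin/env bash
# Print which blend input (A or B) wins at each pixel of a tiny W x H frame.
# Mirrors if(gt(X,Y*(W/H)),A,B) and if(gt(X,W-Y*(W/H)),A,B), using
# X*H > Y*W (and X*H > W*H - Y*W) to avoid fractional W/H in bash.
W=8
H=4

diagonal_map() {
  local mode=$1 x y row
  for (( y = 0; y < H; y++ )); do
    row=""
    for (( x = 0; x < W; x++ )); do
      if [[ $mode == main ]]; then
        if (( x * H > y * W )); then row+="A"; else row+="B"; fi
      else
        if (( x * H > W * H - y * W )); then row+="A"; else row+="B"; fi
      fi
    done
    echo "$row"
  done
}

echo "main diagonal (steps 6 and 7):"
diagonal_map main
echo "opposite diagonal (step 8):"
diagonal_map anti
```

In step 6, for example, A is `[original1]`, so the untouched video fills the upper-right triangle and the yellow vectors the lower-left.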

```
**** Wed Jul 6 17:02:07 IST 2016 ****
ARRAY VALUES: |10||||||||200|||0|||1|-50|0||||
vidstabdetect=result=transforms.trf:shakiness=10:accuracy=15:stepsize=6:mincontrast=0.3:tripod=0:show=0
vidstabtransform=input=transforms.trf:smoothing=200:optalgo=gauss:maxshift=-1:maxangle=0:crop=keep:invert=0:relative=1:zoom=-50:optzoom=0:zoomspeed=0.25:interpol=bilinear:tripod=0:debug=0

# Info:
# 1: Time and date of specific filtering. The filter choices of each run on a video get added to the same log file.
# 2: Clearly shows the user-specified values for filtering (blanks between '|' symbols indicate the default value was used)
# 3: Filtergraph used for the first pass
# 4: Filtergraph used for the second pass
```
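Because blanks between `|` symbols mean "default", the user's own choices can be pulled back out of the ARRAY VALUES line. A minimal bash sketch, assuming each value is terminated by a `|` after a leading `|` (an interpretation of the log format, not something the log states); the variable names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the user-specified values from the
# 'ARRAY VALUES' log line. Empty fields between '|' mean "default used".
values='|10||||||||200|||0|||1|-50|0||||'

rest="${values#|}"        # drop the leading '|'
user=()
blanks=0
while [[ $rest == *"|"* ]]; do
  field="${rest%%|*}"     # text up to the next '|'
  rest="${rest#*|}"       # everything after that '|'
  if [[ -n $field ]]; then
    user+=("$field")
  else
    blanks=$(( blanks + 1 ))
  fi
done
if [[ -n $rest ]]; then   # a value after the final '|', if present
  user+=("$rest")
fi

echo "user-specified values: ${user[*]}"
echo "blank (default) fields: $blanks"
```

The non-blank values (10, 200, 0, 1, -50, 0) line up with parameters in the two filtergraphs below it, e.g. `shakiness=10`, `smoothing=200` and `zoom=-50`.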

```bash
# Using the FFmpeg compilation method for GNU/Linux, found here:
# https://trac.ffmpeg.org/wiki/CompilationGuide/Ubuntu
# Add (or complete) the following to the pre-compile procedures
# ---------------------------------------------------------
cd ~/ffmpeg_sources
wget -O vid-stab-master.tar.gz https://github.com/georgmartius/vid.stab/tarball/master
tar xzvf vid-stab-master.tar.gz
cd *vid.stab*
cmake .
make
sudo make install
# ---------------------------------------------------------
# When compiling FFmpeg, include '--enable-libvidstab' in the './configure' command
# (libvidstab is GPL-licensed, so '--enable-gpl' is needed as well)
# Create the necessary symlinks to 'libvidstab.so' automatically by running:
sudo ldconfig
```

"The directive came down that nobody was to discuss drugs and Michelle Smith on national television"

source: http://community.seattletimes.nwsource.com/archive/?date=20000814&slug=4036702

Michelle Smith's Olympic victories stand, as she never tested positive for banned substances during the Games. Whatever really went on with drug-taking, it's hard to forget the vitriol shown by the loser-Americans of 1996. "She broke the rules," a bitter, twisted-faced Janet Evans whined. As we all know: if you beat the Americans at something, they will come after you until they find a way to destroy you. That case of "sample tampering" in 1998 still seems suspicious; a tad convenient; like they couldn't catch her with the drugs, so they spiced up the samples themselves. Everyone cheats in the end.

Show me the athlete, and I'll find you the substance.

*Partial content originally published: August, 2012

— oioiiooixiii (@oioiiooixiii) March 24, 2016

```bash
for i in {0..9}; do echo "WONDERFUL $i"; done \
| toilet --gay \
| ffmpeg -f tty -i - tty-out.gif
```

— oioiiooixiii {gifs} (@oioiiooixiii_) June 15, 2016

```bash
# Basic syntax for HTML output
toilet -f smmono9 --html oioiiooixiii

# Strips out <br /> tags to improve formatting in Blogger
htmlText="$(toilet -f smmono9 --gay --html oioiiooixiii)"
echo "${htmlText//"<br />"/""}"
```

More info: http://libcaca.zoy.org/toilet.html

```bash
# Twelve stacked 'tblend=all_mode=difference128' filters.
# Deblocking 'spp' and 'average' filters used to minimise strobing effects.
# Due to latency issues, the result in ffplay will differ from an ffmpeg rendering.
ffplay \
  -i video.mp4 \
  -vf \
  "
scale=-2:720,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
spp=4:10,
tblend=all_mode=average,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
spp=4:10,
tblend=all_mode=average,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
spp=4:10,
tblend=all_mode=average,
tblend=all_mode=difference128,
tblend=all_mode=difference128,
tblend=all_mode=difference128
  "
```

Woke to radio this morning; someone saying "Putin is responsible". Don't know what he's responsible for this time; jumped out of bed laughing

— oioiiooixiii (@oioiiooixiii) July 19, 2016

context: http://www.irishexaminer.com/archives/2016/0722/opinion/ban-all-russian-athletes-at-rio--russian-doping-411674.html

Putin has a big needle filled with the dope juice. He jabs it in the athletes' bum-bums whenever they visit the Kremlin.

— oioiiooixiii (@oioiiooixiii) July 20, 2016

Jen hasn't smiled since March 24th. Well, a smile or two perhaps, but no laughter; not like before. No, things are definitely different now. Chilled, and tense; like frozen beef. I think the end is in sight, and they're all just going through the motions. Strange how the wind blows tonight.

context: https://twitter.com/oioiiooixiii/status/742822155713425409

```bash
# MoreDPI - increase resolution beyond monitor limits
alias moredpi="xrandr --output VGA1 --scale 1.25x1.25 --panning 1280x960"
```

In this case, the upper resolution limit of the monitor [VGA1] is 1024x768 (4:3). The desktop is enlarged by 25% in each dimension [--scale 1.25x1.25], to 1280x960, so everything on screen is drawn at 80% of its usual size. A "--panning" argument is given to allow this new area to be interacted with.

One of my bash aliases: written in desperation after reading the xrandr man page. For use with monitors that have archaic max resolutions.

— oioiiooixiii (@oioiiooixiii) January 19, 2016
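The numbers in the alias follow from simple arithmetic: the framebuffer is the monitor mode multiplied by the scale factor, and that product is what goes into `--panning`. A small bash sketch of the calculation, using an integer percentage since bash has no floating point; the variable names are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: work out the --panning size for 'xrandr --scale'.
mode_w=1024
mode_h=768
scale_pct=125   # --scale 1.25x1.25, as an integer percentage

fb_w=$(( mode_w * scale_pct / 100 ))     # framebuffer width
fb_h=$(( mode_h * scale_pct / 100 ))     # framebuffer height
content_pct=$(( 100 * 100 / scale_pct )) # apparent size of on-screen content

echo "framebuffer: ${fb_w}x${fb_h} (use as --panning ${fb_w}x${fb_h})"
echo "content drawn at ${content_pct}% of normal size"
```

Changing `scale_pct` (e.g. to 150) gives the matching `--panning` value for a different scale, as long as the product comes out as whole pixels.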

```bash
# LessDPI - reset resolution to default
alias lessdpi="xrandr --output VGA1 --mode 1024x768 --scale 1x1 --panning 1024x768"
```

xrandr man page: http://www.x.org/archive/X11R7.5/doc/man/man1/xrandr.1.html

link: https://www.youtube.com/watch?v=n0A--mdo3jg

Someone made a karaoke version of "Angle Dance" https://t.co/uDTgbvpDjt pic.twitter.com/HYjEanekPN

— oioiiooixiii (@oioiiooixiii) April 11, 2016

```bash
#!/bin/bash
# Rudimentary script for recording the X11 desktop while looping it through FFplay
# For other platforms see: https://trac.ffmpeg.org/wiki/Capture/Desktop

# Record a 1024x768 region (offset 100,200 on display :0) in the background
ffmpeg \
  -y \
  -video_size 1024x768 \
  -framerate 25 \
  -f x11grab \
  -i :0+100,200 \
  -c libx264 \
  -preset ultrafast \
  -crf 6 \
  recordscreen543.mkv &
pid="$!"

# Stop the recording when this script exits (i.e. when FFplay is closed)
trap "kill $pid" EXIT

# Grab and display the same region with FFplay
ffplay \
  -video_size 1024x768 \
  -framerate 25 \
  -f x11grab \
  -i :0+100,200

exit
```

Longer video demonstrating the effect.

Ireland is still without a government; the elected can't agree. It's vital an agreement is reached soon... pic.twitter.com/TSkeI2rFXq

— oioiiooixiii (@oioiiooixiii) April 13, 2016

```bash
# Isolate motion-vectors using 'difference128' blend filter
# - add brightness, contrast, and scaling, to taste
ffplay \
  -flags2 +export_mvs \
  -i "video.mp4" \
  -vf \
  "
split[original],
codecview=mv=pf+bf+bb[vectors],
[vectors][original]blend=all_mode=difference128,
eq=contrast=7:brightness=-0.3,
scale=720:-2
  "
```

Works best with higher-resolution videos; 4K source used in this case.
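Why the blend isolates the vectors: the two inputs are identical except where `codecview` drew arrows, so after the blend every untouched pixel collapses to the same flat value and only the arrows stand out. A toy bash sketch, assuming `difference128` computes `128 + A - B` clipped to 0..255 per pixel (check the FFmpeg blend source for the exact formula), with A = `[vectors]`, B = `[original]`, and hypothetical pixel values:

```bash
#!/usr/bin/env bash
# Toy model of blend=all_mode=difference128, ASSUMING it computes
# clip(128 + A - B) per pixel, with A = [vectors] and B = [original].
original=(60 60 60 60 60)
vectors=(60 60 255 255 60)   # hypothetical: an arrow drawn over pixels 2-3

clip() {
  local v=$1
  if (( v < 0 )); then v=0; fi
  if (( v > 255 )); then v=255; fi
  echo "$v"
}

out=()
for i in "${!original[@]}"; do
  out+=("$(clip $(( 128 + vectors[i] - original[i] )))")
done
echo "${out[*]}"   # unchanged pixels become flat mid-grey (128)
```

That flat mid-grey is why the script follows the blend with `eq=contrast=7:brightness=-0.3`: it pushes the grey towards black so the arrows read clearly.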

context: https://twitter.com/adamjohnsonNYC/status/715649757167951877

What if, Donald Trump IS Putin? Like in a rubber mask. That's how they both can be Hitler! It all makes total sense pic.twitter.com/rugrEkVWh8

— oioiiooixiii (@oioiiooixiii) March 31, 2016