info: http://qa.digitalcollections.nypl.org/items/510d47dd-f272-a3d9-e040-e00a18064a99

ffmpeg \

-i background.png \

-i video.mkv \

-filter_complex \

"

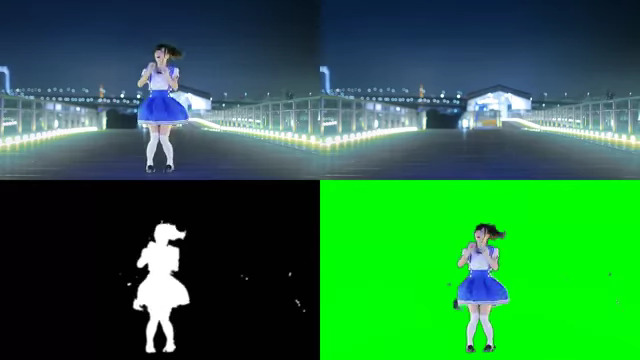

color=#00ff00:size=1280x720 [matte];

[1:0] format=rgb24, split[mask][video];

[0:0][mask] blend=all_mode=difference,

curves=m='0/0 .1/0 .2/1 1/1',

format=gray,

smartblur=1,

eq=brightness=30:contrast=3,

eq=brightness=50:contrast=2,

eq=brightness=-10:contrast=50,

smartblur=3,

format=rgb24 [mask];

[matte][video][mask] maskedmerge,format=rgb24

" \

-shortest \

-pix_fmt yuv422p \

result.mkv

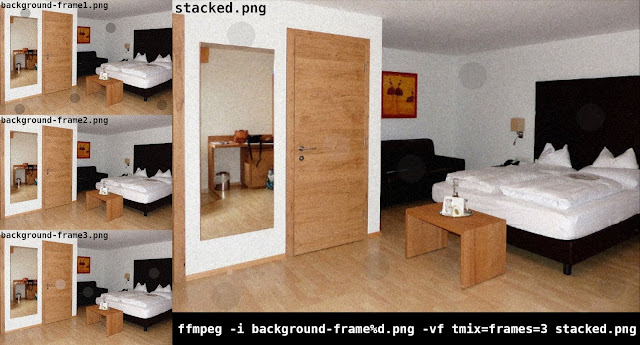

The image-stacking process is just to create a cleaner background image to work with. The idea is to remove momentary anomalies by averaging frames together. The benefits may be negligible though. Image-stacking can be done many ways. I created a quick demo for you using FFmpeg:

— oioiiooixiii (@oioiiooixiii) November 4, 2019

# Image stacking with FFmpeg using 'tmix' filter.

# More info on 'tmix' filter: https://ffmpeg.org/ffmpeg-filters.html#tmix

ffmpeg -i background-frame%d.png -vf tmix=frames=3 stacked.png

# Image stacking is also possible with ImageMagick

convert *.png -evaluate-sequence mean stacked.png
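To illustrate why averaging only dilutes a momentary anomaly rather than removing it, here is a minimal sketch for a single pixel position sampled across five frames. The sample values and the helper names `mean` and `median` are invented for illustration. ImageMagick's `-evaluate-sequence` also accepts `median`, which behaves like the second helper.

```shell
#!/bin/sh
# Hypothetical brightness values of one pixel across five background frames.
# The fourth frame contains a momentary anomaly (e.g. something passing by).
samples="100 102 98 250 100"

# Arithmetic mean: what an equal-weight average of the frames computes.
mean() {
    printf '%s\n' "$@" | awk '{ sum += $1 } END { printf "%d\n", sum / NR }'
}

# Median: sort the values and take the middle one
# (the lower middle when the count is even).
median() {
    printf '%s\n' "$@" | sort -n | awk '{ v[NR] = $1 } END { print v[int((NR + 1) / 2)] }'
}

echo "mean:   $(mean $samples)"     # mean:   130
echo "median: $(median $samples)"   # median: 100
```

The mean is pulled well above the typical value of about 100 by the single outlier, while the median ignores it. This may be one reason the benefit of mean stacking is limited when only a few frames are available.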

# Generate video motion vectors, in various colours, and merge together

# NB: Includes fixed 'curve' filters for issue outlined in blog post

ffplay \

-flags2 +export_mvs \

-i video.mkv \

-vf \

"

split=3 [original][original1][vectors];

[vectors] codecview=mv=pf+bf+bb [vectors];

[vectors][original] blend=all_mode=difference128,

eq=contrast=7:brightness=-0.3,

split=3 [yellow][pink][black];

[yellow] curves=r='0/0 0.1/0.5 1/1':

g='0/0 0.1/0.5 1/1':

b='0/0 0.4/0.5 1/1' [yellow];

[pink] curves=r='0/0 0.1/0.5 1/1':

g='0/0 0.1/0.3 1/1':

b='0/0 0.1/0.3 1/1' [pink];

[original1][yellow] blend=all_expr=if(gt(X\,Y*(W/H))\,A\,B) [yellorig];

[pink][black] blend=all_expr=if(gt(X\,Y*(W/H))\,A\,B) [pinkblack];

[pinkblack][yellorig]blend=all_expr=if(gt(X\,W-Y*(W/H))\,A\,B)

"

# Process:

# 1: Three copies of input video are made

# 2: Motion vectors are applied to one stream

# 3: The result of #2 is 'difference128' blended with an original video stream

# The brightness and contrast are adjusted to improve clarity

# Three copies of this vectors result are made

# 4: Curves are applied to one vectors stream to create yellow colour

# 5: Curves are applied to another vectors stream to create pink colour

# 6: Original video stream and yellow vectors are combined diagonally

# 7: Pink vectors stream and original vectors stream are combined diagonally

# 8: The results of #6 and #7 are combined diagonally (opposite direction)
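The three diagonal blends in steps 6 to 8 divide the frame into four triangles. The sketch below evaluates the same two comparisons used in the `blend` expressions for a single pixel and reports which stream is visible there. The function name `region` and the 1280x720 frame size are assumptions for illustration; the output names are the stream labels from the filtergraph.

```shell
#!/bin/sh
# region X Y W H
# Evaluate the two diagonal tests from the filtergraph for one pixel and
# print the label of the stream that ends up visible at that position.
region() {
    awk -v X="$1" -v Y="$2" -v W="$3" -v H="$4" 'BEGIN {
        d1 = (X > Y * (W / H))        # gt(X, Y*(W/H)): right of the top-left to bottom-right diagonal
        d2 = (X > W - Y * (W / H))    # gt(X, W-Y*(W/H)): right of the top-right to bottom-left diagonal
        if (d2) print (d1 ? "pink" : "black")        # taken from [pinkblack]
        else    print (d1 ? "original" : "yellow")   # taken from [yellorig]
    }'
}

region 640 10 1280 720     # near the top edge    -> original
region 1270 360 1280 720   # near the right edge  -> pink
region 640 710 1280 720    # near the bottom edge -> black
region 10 360 1280 720     # near the left edge   -> yellow
```

Here "black" refers to the vectors stream that received no colour curves, so the result shows the untouched video at the top, yellow vectors on the left, pink vectors on the right and plain vectors at the bottom.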

**** Wed Jul 6 17:02:07 IST 2016 ****

ARRAY VALUES: |10||||||||200|||0|||1|-50|0||||

vidstabdetect=result=transforms.trf:shakiness=10:accuracy=15:stepsize=6:mincontrast=0.3:tripod=0:show=0

vidstabtransform=input=transforms.trf:smoothing=200:optalgo=gauss:maxshift=-1:maxangle=0:crop=keep:invert=0:relative=1:zoom=-50:optzoom=0:zoomspeed=0.25:interpol=bilinear:tripod=0:debug=0

# Info:

# 1: Time and date of specific filtering. The filter choices of each run on a video get added to the same log file.

# 2: Clearly shows the user-specified values for filtering (blanks between '|' symbols indicate default value used)

# 3: Filtergraph used for first pass

# 4: Filtergraph used for second pass
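A log entry in this shape could be produced along the following lines. This is only a sketch: the helper names `build_filter` and `log_run`, the log path, and the shortened values string are hypothetical and not taken from the original script.

```shell
#!/bin/sh
# Join key=value options into one filter string, e.g.
#   build_filter vidstabdetect result=transforms.trf shakiness=10
# prints: vidstabdetect=result=transforms.trf:shakiness=10
build_filter() {
    name="$1"; shift
    old_ifs="$IFS"; IFS=:
    printf '%s=%s\n' "$name" "$*"   # "$*" joins the options with ':'
    IFS="$old_ifs"
}

# Append one entry to a log file: date header, user values, both passes.
log_run() {
    logfile="$1"; values="$2"; pass1="$3"; pass2="$4"
    {
        printf '**** %s ****\n' "$(date)"
        printf 'ARRAY VALUES: %s\n' "$values"
        printf '%s\n%s\n' "$pass1" "$pass2"
    } >> "$logfile"
}

detect=$(build_filter vidstabdetect result=transforms.trf shakiness=10)
transform=$(build_filter vidstabtransform input=transforms.trf smoothing=200 zoom=-50)

# Placeholder values string; the real one lists every option slot.
log_run "${TMPDIR:-/tmp}/vidstab-example.log" '|10||200|-50|' "$detect" "$transform"
```

The two strings would then be passed to FFmpeg in turn, for example `ffmpeg -i video.mkv -vf "$detect" -f null -` for the first pass and `ffmpeg -i video.mkv -vf "$transform" stabilised.mkv` for the second (the output name is an assumption).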

# Using the FFmpeg compilation method for GNU/Linux, found here

# https://trac.ffmpeg.org/wiki/CompilationGuide/Ubuntu

# Add (or complete) the following to pre-compile procedures

# ---------------------------------------------------------

cd ~/ffmpeg_sources

wget -O vid-stab-master.tar.gz https://github.com/georgmartius/vid.stab/tarball/master

tar xzvf vid-stab-master.tar.gz

cd *vid.stab*

cmake .

make

sudo make install

# ---------------------------------------------------------

# When compiling FFmpeg, include '--enable-libvidstab' in './configure PATH'

# Create necessary symlinks to 'libvidstab.so' automatically by running

sudo ldconfig
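After compiling, it may be worth confirming that the new build actually exposes the two vid.stab filters. The sketch below checks a filter listing read from standard input; the function name `has_vidstab` is made up for illustration.

```shell
#!/bin/sh
# Read a filter listing on stdin and succeed only if both vid.stab
# filters appear in it.
has_vidstab() {
    listing=$(cat)
    printf '%s\n' "$listing" | grep -q 'vidstabdetect' &&
    printf '%s\n' "$listing" | grep -q 'vidstabtransform'
}

# Typical use against the freshly built binary:
#   ffmpeg -hide_banner -filters | has_vidstab && echo "libvidstab enabled"
```

If the check fails, the most likely cause is that `--enable-libvidstab` was missing from the `./configure` step.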